This blog covers a workaround for configuring SharePoint 2010 to use SQL Authentication, so that a SharePoint web front end (WFE) can consume SQL Server resources in an untrusted domain. This scenario is common in Extranet and SharePoint for Internet implementations. It isolates the data layer on a separate network for security reasons, typically to reduce the risk that a compromised web front end gives an intruder direct access to the internal network.

The conceptual topology of this deployment looks as follows:

This diagram also includes a UAG to provide access for external users and ADFS to allow single sign on for internal users against their domain accounts but are not discussed further in this blog.

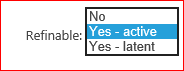

A number of blogs have been published on configuring SharePoint 2010 to use SQL Authentication with PowerShell scripts. However, they do not cover configuring the service applications, such as Managed Metadata, Search and Excel Services, that you would have in a SharePoint for Internet or SharePoint Server deployment. If you configure these services through PowerShell with the SQL Authentication parameters, the provisioning code still tries to add the service's domain account to SQL Server. From brief testing, this step is not required. In an untrusted domain it fails, which prevents the service from being configured.

Note that in SharePoint 2007 this type of configuration worked without any issues.

I’m not going to cover provisioning the SharePoint configuration database or the other basic installation steps. These are well covered in Sundar Ramakrishnan's blog (link below) and related posts. The one addition I would make is a SQL Server alias, created with the cliconfg tool, that points to the non-standard SQL Server port.

http://blogs.technet.com/b/surama/archive/2010/03/17/sharepoint-2010-configuration-with-powershell-and-untrusted-sql-domain-sql-authentication.aspx
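If you prefer to script the alias rather than click through cliconfg, the sketch below writes the same registry value that cliconfg creates. A TCP/IP alias is stored under `HKLM\SOFTWARE\Microsoft\MSSQLServer\Client\ConnectTo` (and the `Wow6432Node` equivalent for 32-bit clients) as `DBMSSOCN,<server>,<port>`. The function names, and the alias, server and port values in the usage line, are hypothetical examples.

```powershell
# Sketch: create a TCP/IP SQL Server client alias in the registry, in the
# same place cliconfg.exe stores it. Function names are hypothetical.
function Get-SqlAliasValue {
    param(
        [Parameter(Mandatory = $true)][string]$Server,
        [Parameter(Mandatory = $true)][int]$Port
    )
    # "DBMSSOCN" selects the TCP/IP network library
    return "DBMSSOCN,$Server,$Port"
}

function Set-SqlAlias {
    param(
        [Parameter(Mandatory = $true)][string]$Alias,
        [Parameter(Mandatory = $true)][string]$Server,
        [Parameter(Mandatory = $true)][int]$Port
    )
    $value = Get-SqlAliasValue -Server $Server -Port $Port
    # Write the alias for both 64-bit and 32-bit client libraries
    $keys = @(
        'HKLM:\SOFTWARE\Microsoft\MSSQLServer\Client\ConnectTo',
        'HKLM:\SOFTWARE\Wow6432Node\Microsoft\MSSQLServer\Client\ConnectTo'
    )
    foreach ($key in $keys) {
        if (-not (Test-Path $key)) {
            New-Item -Path $key -Force | Out-Null
        }
        New-ItemProperty -Path $key -Name $Alias -Value $value -PropertyType String -Force | Out-Null
    }
}
```

Run it elevated on every SharePoint server in the farm. An example is `Set-SqlAlias -Alias "SP_SQL" -Server "sql01.lan.local" -Port 14330`, where the server name and port are placeholders for your own.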

To configure the services, follow these basic steps:

- Configure your network as shown in the conceptual topology above. Only allow the SQL Server TCP/IP port through the firewall. I also recommend using a non-standard port, that is, not 1433.

- Follow Sundar Ramakrishnan's blog (linked above) to get the basic SharePoint installation and the Central Administration site up and running.

- Configure the firewall to allow the ports required for a domain trust.

- Create a trust between the DMZ domain and the LAN domain.

- Configure all the SharePoint 2010 services. I recommend doing this in a script, so that you can run it and confirm the services were created within a short time window. This minimises the risk of attack while the firewall is open.

- Close the firewall ports that were opened for the domain trust.

- Test that all the services are operational.
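Before and after the trust window, it helps to check from a SharePoint server which ports the firewall actually lets through. The SQL Server port should always be reachable, and the trust-related ports should be blocked again once the services are provisioned. The sketch below is a plain TCP connect test with a timeout. `Test-TcpPort` is a hypothetical helper name, not a built-in cmdlet.

```powershell
# Sketch: return $true if a TCP connection to ComputerName:Port succeeds
# within TimeoutMs, otherwise $false. Test-TcpPort is a hypothetical name.
function Test-TcpPort {
    param(
        [Parameter(Mandatory = $true)][string]$ComputerName,
        [Parameter(Mandatory = $true)][int]$Port,
        [int]$TimeoutMs = 2000
    )
    $client = New-Object System.Net.Sockets.TcpClient
    try {
        # BeginConnect lets us enforce our own timeout instead of the OS default
        $async = $client.BeginConnect($ComputerName, $Port, $null, $null)
        if (-not $async.AsyncWaitHandle.WaitOne($TimeoutMs, $false)) {
            return $false
        }
        # EndConnect throws if the connection was refused
        $client.EndConnect($async)
        return $true
    }
    catch {
        # Name resolution failures and refused connections end up here
        return $false
    }
    finally {
        $client.Close()
    }
}
```

For example, `Test-TcpPort -ComputerName "sql01.lan.local" -Port 14330` should return `$True` at all times. After the trust ports are closed, `Test-TcpPort -ComputerName "dc01.lan.local" -Port 389` (LDAP) should return `$False`, as should Kerberos (88) and SMB (445). The host names and SQL Server port here are placeholders.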

We have found that Web Analytics does not work in this scenario, because its PowerShell cmdlet has no SQL Authentication parameter. We haven’t done exhaustive testing yet, but initial creation of web applications and sites works correctly.
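To confirm which service applications were created and are online, you can list them with `Get-SPServiceApplication`. The filter below is a small sketch that returns only those whose `Status` is not `Online`. `Get-OfflineServiceApplication` is a hypothetical helper name, and it assumes the objects returned by `Get-SPServiceApplication` expose a `Status` property.

```powershell
# Sketch: pass through only the service applications whose Status is not "Online".
# Run from the SharePoint 2010 Management Shell, piping in Get-SPServiceApplication.
function Get-OfflineServiceApplication {
    param(
        [Parameter(ValueFromPipeline = $true)]
        $ServiceApplication
    )
    process {
        # Compare the string form of Status so enum values and plain strings both work
        if ("$($ServiceApplication.Status)" -ne 'Online') {
            $ServiceApplication
        }
    }
}
```

Use `Get-SPServiceApplication | Format-Table DisplayName, TypeName, Status -AutoSize` for the full list, and `Get-SPServiceApplication | Get-OfflineServiceApplication` for the problem ones. A service that was never created at all, such as Web Analytics here, will not appear in either list, so compare the output against the services you meant to provision.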

Below are example scripts for provisioning some of the services. They are provided for reference only, so please write your own rather than copying them. Use the Get-Help cmdlet to check the exact parameters. Some cmdlets take the database credentials as a PSCredential object (-DatabaseCredentials), while others take separate SQL login and password parameters (nothing like consistency):

Create a PSCredential object to hold the SQL login details for the databases. The examples reuse the same credentials for most services, but you should use a different SQL login for each service:

```powershell
$dbCredentials = New-Object -TypeName System.Management.Automation.PSCredential -ArgumentList "s_SPExtranetFarm", (ConvertTo-SecureString "Password" -AsPlainText -Force)
```
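Hard-coding the password with `-AsPlainText` is fine for a lab, but it leaves the SQL password in the script file. The sketch below builds the same PSCredential and prompts for the password (masked) when none is passed in. This also makes it easy to use a separate SQL login per service. `New-ServiceDbCredential` and the login name in the usage line are hypothetical.

```powershell
# Sketch: build a PSCredential for a service's SQL login without storing the
# password in the script. New-ServiceDbCredential is a hypothetical helper.
function New-ServiceDbCredential {
    param(
        [Parameter(Mandatory = $true)][string]$UserName,
        [System.Security.SecureString]$Password
    )
    if ($null -eq $Password) {
        # Read-Host -AsSecureString masks the input and returns a SecureString
        $Password = Read-Host -AsSecureString -Prompt "SQL password for $UserName"
    }
    New-Object System.Management.Automation.PSCredential -ArgumentList $UserName, $Password
}
```

For example, `$stateDbCredentials = New-ServiceDbCredential -UserName "s_SPExtranetState"` prompts once for that login's password. You would then pass `$stateDbCredentials` to the State Service cmdlets.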

State Service:

```powershell
$serviceApp = New-SPStateServiceApplication -Name "State Service"

New-SPStateServiceDatabase -Name "State_Service_DB" -DatabaseCredentials $dbCredentials -ServiceApplication $serviceApp

New-SPStateServiceApplicationProxy -Name "State Service Proxy" -ServiceApplication $serviceApp -DefaultProxyGroup
```

Session Service:

```powershell
Enable-SPSessionStateService -DatabaseName "Session_State_Service_DB" -DatabaseCredentials $dbCredentials
```

Usage Service:

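```powershell
# New-SPUsageApplication takes the SQL login as -DatabaseUsername/-DatabasePassword rather than -DatabaseCredentials
New-SPUsageApplication -Name "Usage and Health Data Collection Service Application" -DatabaseName "Usage_and_Health_Data_DB" -DatabaseUsername $dbCredentials.UserName -DatabasePassword $dbCredentials.Password
```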

Business Data Connectivity Services:

```powershell
# Validate-AccountName is a helper function, not a built-in cmdlet; it must be defined before this runs
do {
    $serviceApplicationPoolAccount = Read-Host "Account for application pool (domain\username)"
} until (Validate-AccountName $serviceApplicationPoolAccount)

# The BCS database uses its own SQL login
$dbCredentials = New-Object -TypeName System.Management.Automation.PSCredential -ArgumentList "s_SPExtranetBCS", (ConvertTo-SecureString "password" -AsPlainText -Force)

New-SPServiceApplicationPool -Name "Business_Data_Connectivity_Service_Application_Pool" -Account $serviceApplicationPoolAccount

# SP_SQL is the SQL Server name or alias
New-SPBusinessDataCatalogServiceApplication -Name "Business Data Connectivity Service Application" -ApplicationPool "Business_Data_Connectivity_Service_Application_Pool" -DatabaseServer "SP_SQL" -DatabaseName "Business_Data_Connectivity_Service_DB" -DatabaseCredentials $dbCredentials
```
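The BCS script relies on a `Validate-AccountName` function that is not built into SharePoint or PowerShell. The sketch below is one hypothetical implementation and should be defined before running that script. It assumes the application pool account must already be registered as a SharePoint managed account, which, as far as I know, `New-SPServiceApplicationPool -Account` expects. It checks the `domain\username` format first and then looks the account up with `Get-SPManagedAccount`.

```powershell
# Hypothetical implementation of Validate-AccountName: returns $true only when
# the name looks like domain\username and is a SharePoint managed account.
function Validate-AccountName {
    param([string]$AccountName)

    # Expect exactly one backslash separating the domain and the user name
    if ($AccountName -notmatch '^[^\\]+\\[^\\]+$') {
        Write-Host "Use the form domain\username"
        return $false
    }
    try {
        # -ErrorAction Stop turns a "not found" error into a catchable exception
        $managed = Get-SPManagedAccount -Identity $AccountName -ErrorAction Stop
        return ($null -ne $managed)
    }
    catch {
        Write-Host "$AccountName is not a SharePoint managed account (see New-SPManagedAccount)"
        return $false
    }
}
```

Write-Host is used for the messages so that only the Boolean result reaches the pipeline, which keeps the `until (...)` condition in the loop clean.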

I have a support call open with Microsoft on this issue, and it is a known problem. The product team is deciding whether to fix it. I’ve expressed my opinion strongly, as this worked fine in SharePoint 2007 and I wouldn’t want to deploy SharePoint with a domain trust. Their other recommended workaround is to put the SQL Server in the DMZ domain while configuring the services and then move it back to the LAN. I don’t think SQL Server would be too happy with that move, although it is lower risk because the firewall is never opened up.